The state of AI agents

The AI Engineer World’s Fair took place last week in San Francisco. After seeing some talks live and watching recordings of others, I had a few takeaways about the current state of AI agents.

The Rise of Ambient Agents

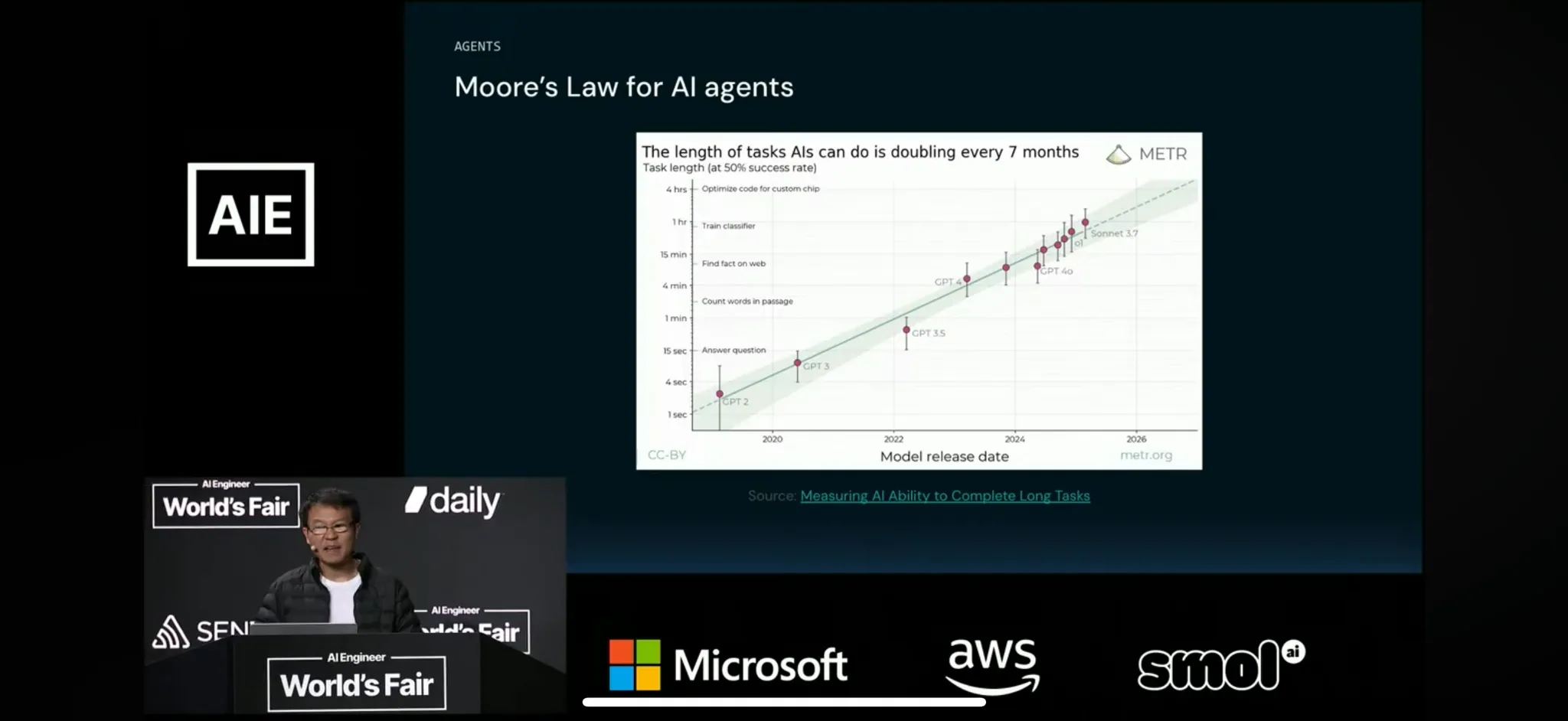

“Ambient” agents are becoming more common. They perform tasks autonomously and asynchronously “in the background”, often without a chat interface. Scott Wu of Cognition showed a key driver for this: the length of tasks that AI can perform autonomously is doubling every ~7 months.
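To make the doubling claim concrete, here is a minimal sketch of the arithmetic, assuming the trend holds as steady exponential growth. The one-hour starting horizon and the function name `task_horizon` are illustrative assumptions, not figures from the talk.

```python
def task_horizon(initial_minutes: float, months_elapsed: float,
                 doubling_months: float = 7.0) -> float:
    """Projected autonomous task length, assuming it doubles every `doubling_months`."""
    return initial_minutes * 2 ** (months_elapsed / doubling_months)


start = 60.0  # hypothetical starting horizon: a one-hour task
for months in (0, 7, 14, 28):
    # Each 7-month step doubles the horizon: 60 -> 120 -> 240 -> ... -> 960 minutes
    print(f"{months:>2} months: {task_horizon(start, months):.0f} minutes")
```

Under these assumptions, a one-hour task horizon grows to about 16 hours in 28 months (four doublings), which is why workflows that wait synchronously on the agent become less practical over time.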

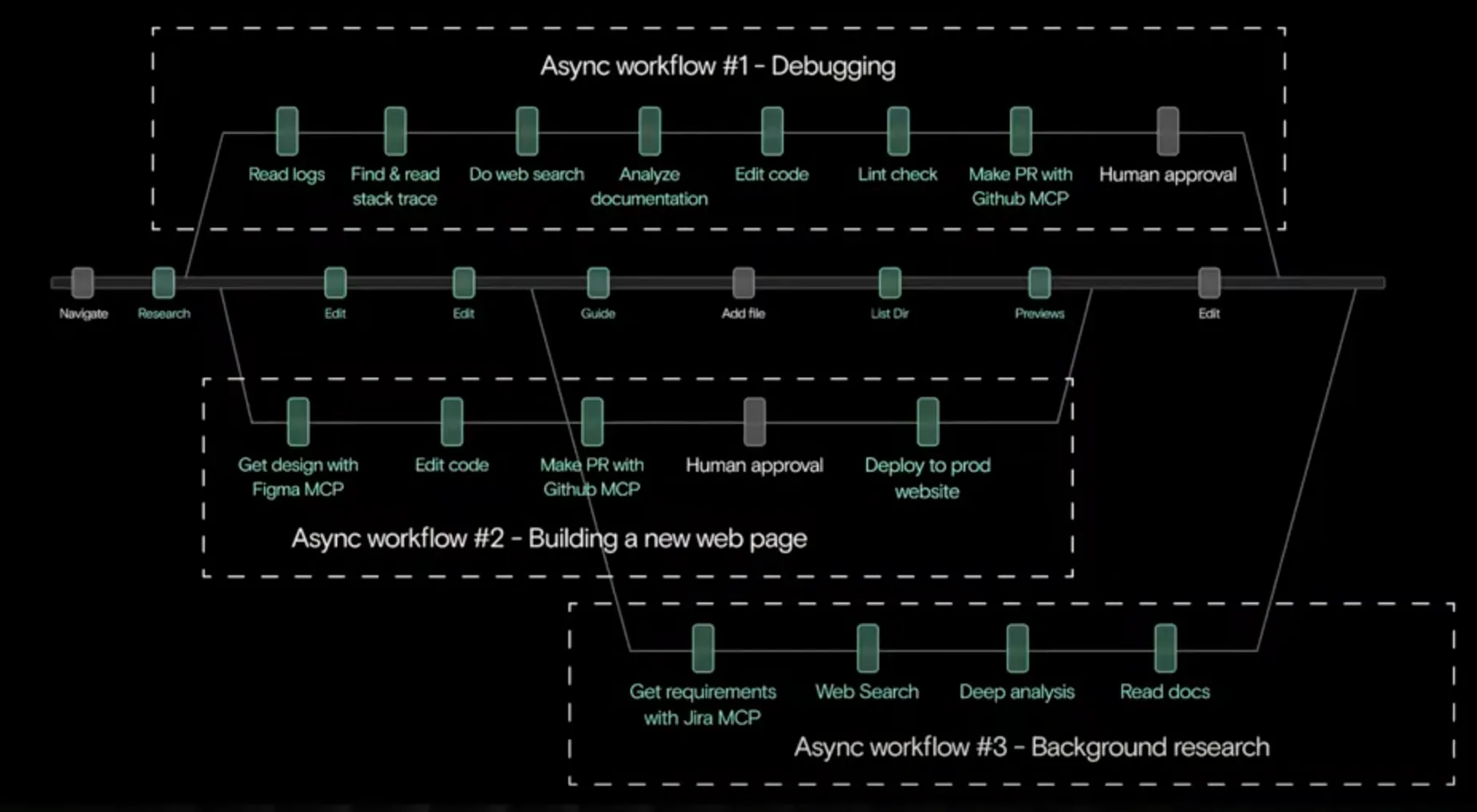

Scott outlined the history of Devin, Cognition’s coding agent. Since last summer, Devin has been able to fix bugs or implement features based on user requests via Slack or other surfaces. Kevin Hou from Windsurf also discussed the transition from human-in-the-loop synchronous workflows that are ~80:20 agent:human to “ambient” asynchronous workflows that are autonomous and only ask the human for final approval.

Recent podcasts with Michael Truell (Cursor) have touched on this theme. OpenAI also launched their Codex agent, which can connect to GitHub and manage asynchronous coding tasks.

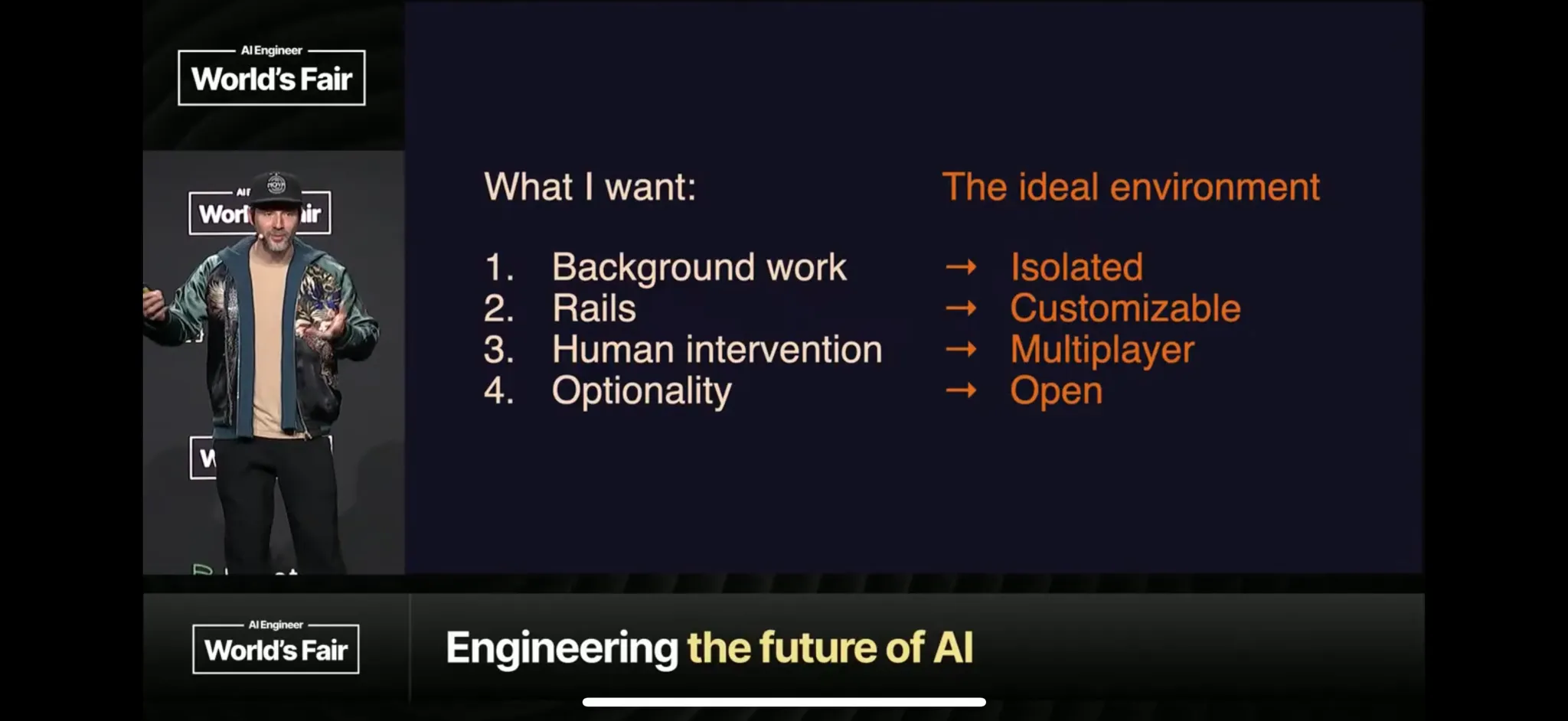

Solomon Hykes summarized the environment he wants for ambient agents: do background work for me, with the ability to easily add constraints (e.g., tools to define the action space, secrets, etc.), with a “multiplayer” mode (human in the loop) so that I get alerted if an agent needs my attention, and with discoverability so that I can easily choose different agents to work with.

The Bitter Lesson + Agent UX

The right agent UX is a big question right now. Boris Cherny of Anthropic gave a talk on Claude Code. His view on agent UX is influenced by the bitter lesson: general methods that scale with computation and data outperform more specialized approaches that rely on hand-crafted inductive biases. Boris mentioned a corollary to this: general things around the model also tend to win.

With models improving quickly, Claude Code is general, minimalistic, and unopinionated. It runs in the terminal without a UI. At Anthropic demo day, Boris mentioned that people may not be using IDEs by the end of this year.

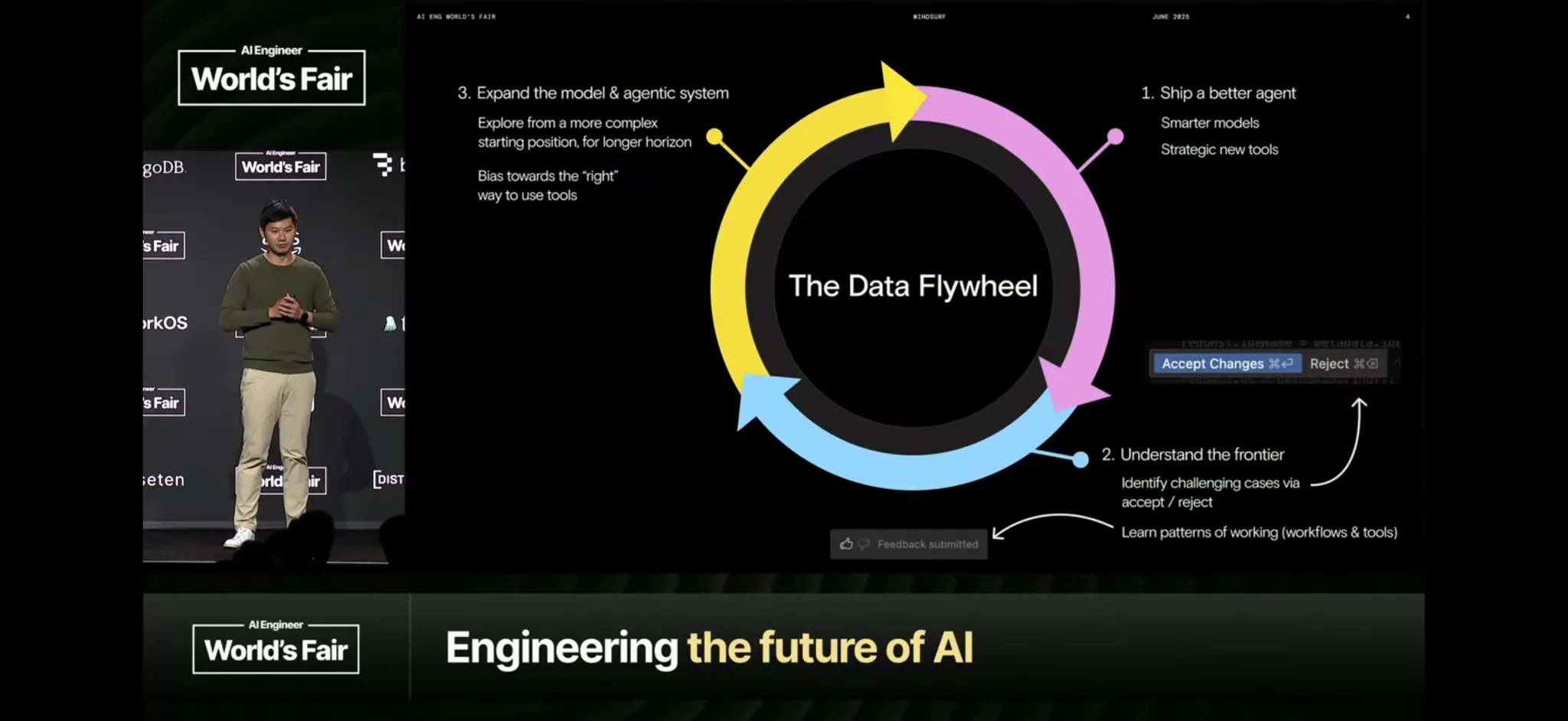

Kevin Hou from Windsurf gave an alternative view: build an opinionated UI (IDE, in the case of coding) tailored to the workflow of today and use it to collect data. The data can be used to train models for that specific task, as shown in the data flywheel underpinning Windsurf’s SWE-1 model.

Agent Training

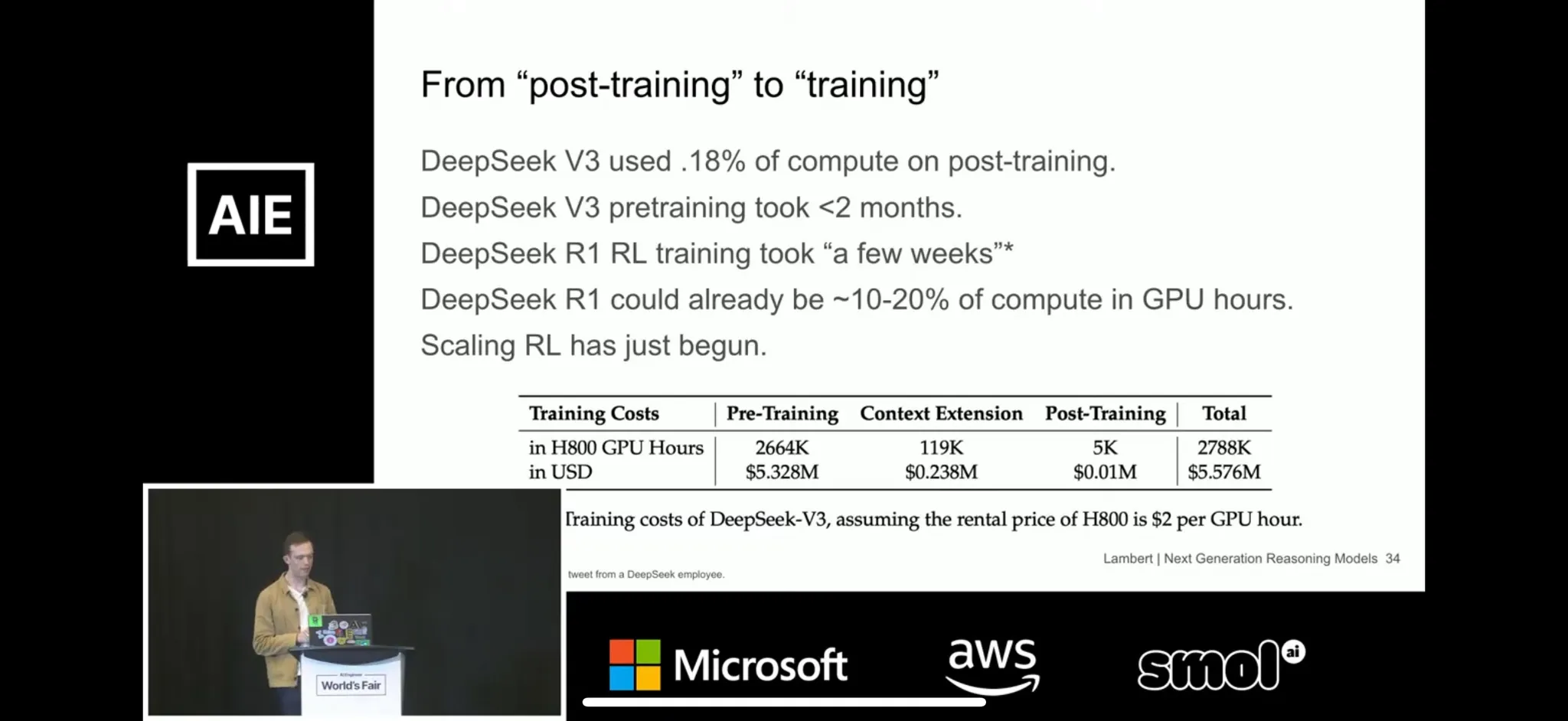

Agents are being trained with task-specific reinforcement learning (RL). Nathan Lambert gave an overview of Reinforcement Learning with Verifiable Rewards (RLVR) and made the point that we are early on this scaling curve: OpenAI increased the RL compute by ~10x from o1 to o3. Historically, RL “post-training” has used far less compute than pre-training.

A central question is whether RLVR will work on “non-verifiable tasks” (beyond code and math). Will Brown mentioned that a well-curated LLM-as-judge reward function is a promising approach.

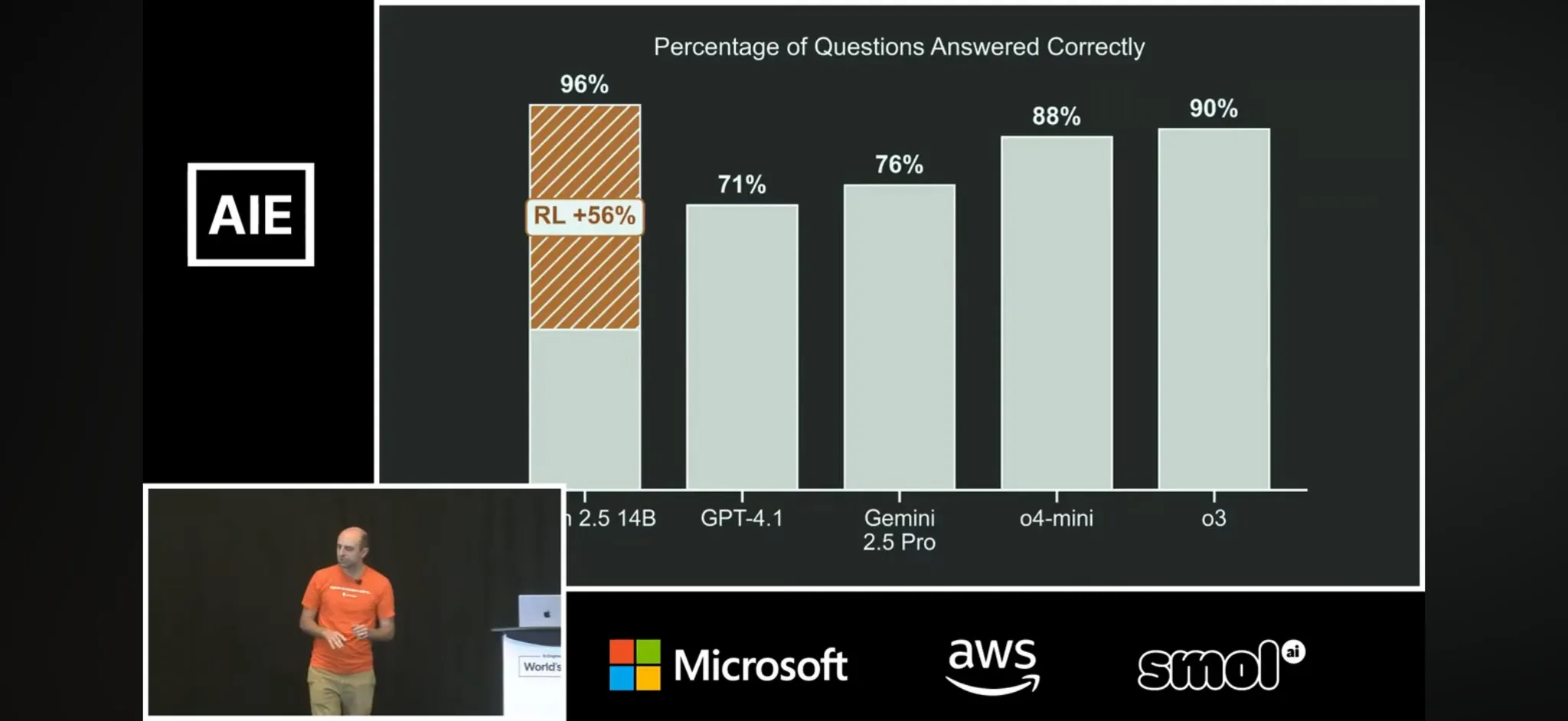

Kyle Corbitt from OpenPipe discussed Art-E, which is a nice example of this. They built an agent for e-mail QA using RLVR (described in their blog post). The project used the Enron email dataset, QA pairs synthetically generated from ~100k emails, and an LLM-as-judge check of answer correctness as the reward function.

They trained Art-E to answer questions about e-mails using Qwen-2.5-14b as the base model with e-mail tools. Training used GRPO, the project took ~1 week of work, and it cost $80 of compute. The central point is that Art-E can outperform prompted frontier models on this narrow task.
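As a rough illustration of how a judge-based reward feeds into GRPO, the sketch below scores a group of sampled answers to one question and converts the rewards into group-relative advantages (reward minus the group mean, divided by the group standard deviation). This is not OpenPipe's code: `judge_reward` is a hypothetical stand-in that compares strings where a real setup would call a judge model, and the sample answers are made up. Using the population standard deviation is also an assumption, since implementations differ on this detail.

```python
from statistics import mean, pstdev


def judge_reward(answer: str, reference: str) -> float:
    """Hypothetical stand-in for an LLM-as-judge call: 1.0 if judged correct, else 0.0.

    A real judge would prompt a model; here we only compare normalized strings.
    """
    return 1.0 if answer.strip().lower() == reference.strip().lower() else 0.0


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """GRPO-style advantages: normalize each reward against its own sampling group."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # assumption: population std; eps avoids division by zero
    return [(r - mu) / (sigma + eps) for r in rewards]


# Made-up group of answers sampled from the policy for a single question.
reference = "March 3"
samples = ["March 3", "april 9", "march 3 ", "I don't know"]

rewards = [judge_reward(s, reference) for s in samples]
advantages = group_relative_advantages(rewards)

for sample, reward, adv in zip(samples, rewards, advantages):
    print(f"{sample!r:>16}  reward={reward:.1f}  advantage={adv:+.2f}")
```

Answers judged correct get a positive advantage and the rest a negative one, so the policy update pushes toward the better samples in each group without needing a separate value model. If every answer in a group receives the same reward, all advantages are zero and that group contributes no learning signal.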

Agent Tools

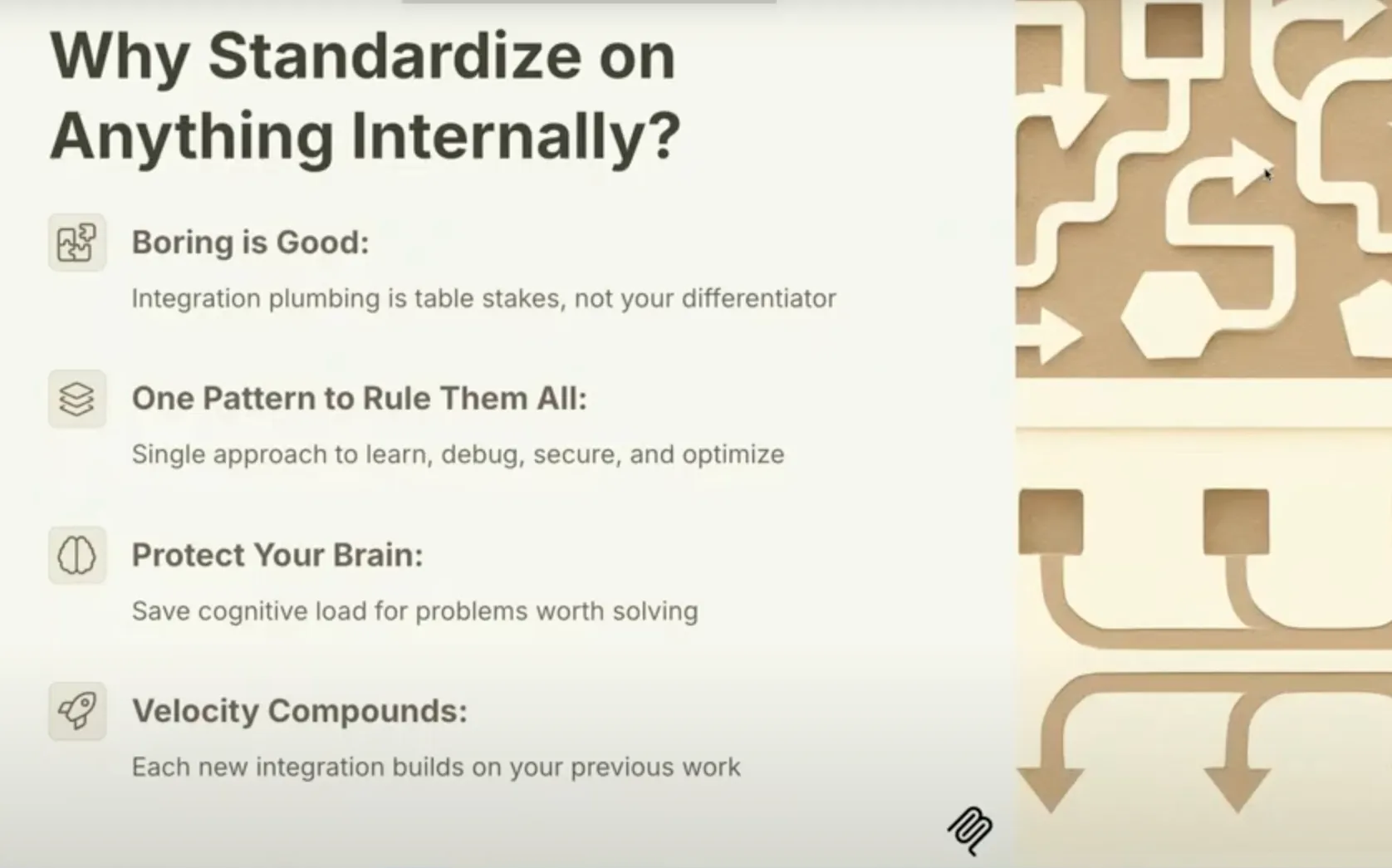

Agents need the right tools to be effective. It’s not hard to define tools and bind them to an LLM using the various model SDKs. So, why is a protocol like MCP useful? John Walsch gave a talk on the practical origins of MCP within Anthropic.

LLMs got good at tool calling. Everyone started writing tools without coordination, resulting in duplication and many custom endpoints for each use-case. Inconsistent interfaces confused developers. Duplicated functionality created maintenance challenges.

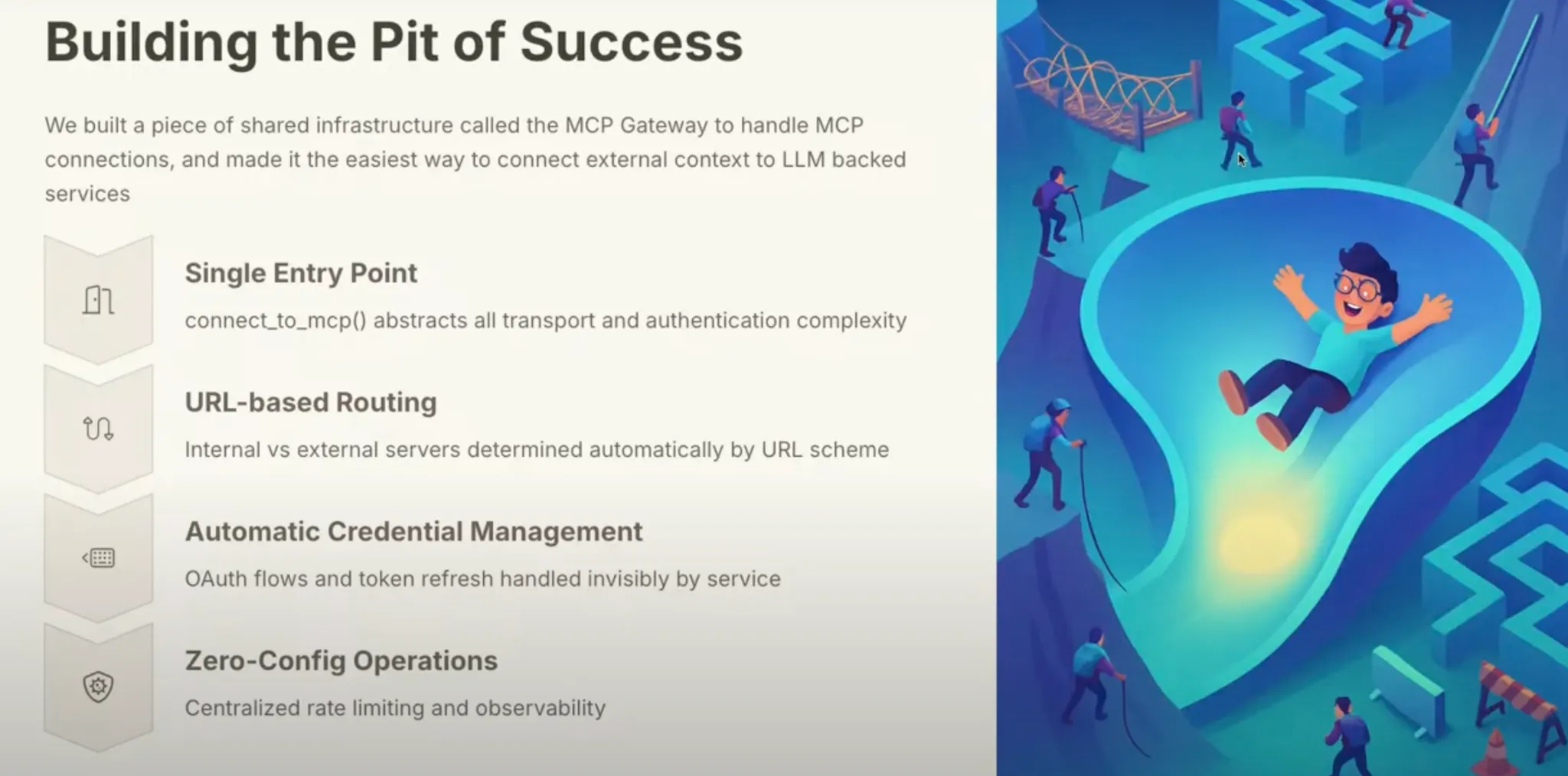

MCP provides a standard protocol to address these problems. But an interesting lesson from the talk was the need to build internal tooling around MCP: Anthropic built an internal MCP Gateway to handle MCP connections and made it the easiest way to connect Claude to context or tools. It abstracts all transport and auth, and provides observability. This gives a central point of ingress / egress for all model context, allowing for auditing / policy enforcement and visibility into what models are trying to do.
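The gateway idea can be sketched in a few lines: every tool call passes through one object that checks a policy and records an audit entry before dispatching. This is a toy illustration only. It does not use the MCP SDK or reflect Anthropic's internal design, and the class `ToolGateway`, the caller name `agent-a`, and the tool `search_docs` are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class ToolGateway:
    """Hypothetical single point of ingress/egress for tool calls."""
    tools: dict[str, Callable[..., Any]] = field(default_factory=dict)
    allowed: dict[str, set[str]] = field(default_factory=dict)  # caller -> tool names
    audit_log: list[dict[str, Any]] = field(default_factory=list)

    def register(self, name: str, fn: Callable[..., Any]) -> None:
        self.tools[name] = fn

    def allow(self, caller: str, tool_name: str) -> None:
        self.allowed.setdefault(caller, set()).add(tool_name)

    def call(self, caller: str, tool_name: str, **kwargs: Any) -> Any:
        permitted = (tool_name in self.tools
                     and tool_name in self.allowed.get(caller, set()))
        # Record every attempt, including denied ones, for later auditing.
        self.audit_log.append({"caller": caller, "tool": tool_name,
                               "args": kwargs, "permitted": permitted})
        if not permitted:
            raise PermissionError(f"{caller} may not call {tool_name}")
        return self.tools[tool_name](**kwargs)


gateway = ToolGateway()
gateway.register("search_docs", lambda query: f"results for {query}")
gateway.allow("agent-a", "search_docs")

result = gateway.call("agent-a", "search_docs", query="mcp")
print(result)

try:
    gateway.call("agent-b", "search_docs", query="secrets")
except PermissionError as exc:
    print("denied:", exc)

print(len(gateway.audit_log), "calls audited")
```

Because both the allowed and the denied call land in `audit_log`, the same choke point serves policy enforcement and visibility into what each agent attempted.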

Agent Memory

Scott Wu made an important point: if you tell an agent how to do something, you want it to remember that for next time! Devin, Windsurf and Cursor all have memory. In his talk on Claude Code, Boris Cherny gave some useful examples of saving memories to various CLAUDE.md files.
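A file-based memory of this kind can be approximated very simply: append instructions to a markdown file and prepend them to the next task. The sketch below is an assumption-laden illustration, not how Claude Code, Devin, Windsurf, or Cursor actually implement memory. The helpers `save_memory`, `load_memories`, and `build_prompt` are hypothetical, and the file is written to a temporary directory for the demo.

```python
import tempfile
from pathlib import Path


def save_memory(path: Path, note: str) -> None:
    """Append one instruction to the memory file as a markdown bullet."""
    with path.open("a", encoding="utf-8") as f:
        f.write(f"- {note.strip()}\n")


def load_memories(path: Path) -> list[str]:
    """Read back all bullet-point memories; an absent file means no memories yet."""
    if not path.exists():
        return []
    lines = path.read_text(encoding="utf-8").splitlines()
    return [line[2:].strip() for line in lines if line.startswith("- ")]


def build_prompt(task: str, memories: list[str]) -> str:
    """Prepend remembered instructions to the task sent to the agent."""
    if not memories:
        return task
    remembered = "\n".join(f"- {m}" for m in memories)
    return f"Remembered instructions:\n{remembered}\n\nTask: {task}"


with tempfile.TemporaryDirectory() as tmp:
    memory_file = Path(tmp) / "CLAUDE.md"
    save_memory(memory_file, "Run the test suite before committing")
    save_memory(memory_file, "Use tabs, not spaces, in Makefiles")
    memories = load_memories(memory_file)
    prompt = build_prompt("Fix the failing build", memories)

print(prompt)
```

This design always injects every memory, which is the simplest policy but also the one most likely to surprise the user; deciding when to fetch which memory is the harder UX problem.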

The UX for memory is tricky, though. When should the agent fetch memories? Simon Willison gave an example of the challenge: memory can mean loss of control for the user. He highlighted a case where GPT-4o injected location into an image based upon his memories, which was not desired.

Also, memory systems today are external to the model. Nathan Lambert brought up the topic of continual learning, which has been mentioned elsewhere recently. Human feedback may be incorporated directly into the model in the future rather than using external memory systems.

Conclusion

At least three themes emerged:

Autonomy is accelerating. Agents can now work on tasks for hours or days, and the length of tasks they can complete autonomously is doubling roughly every 7 months. This is pushing us toward “ambient” agents that work behind the scenes.

The tooling ecosystem is maturing. Standards like MCP solve coordination problems, while memory systems are becoming essential for agents to learn from past interactions.

Training methods are evolving. Reinforcement learning with verifiable rewards is showing promise beyond just code and math, potentially transforming how we build agents in non-verifiable domains.

The future of AI agents looks less like improved chatbots and more like assistants that handle complex work autonomously.